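ELK stands for Elasticsearch, Logstash, and Kibana. Combined, they can be used to build an online log system.

What do E, L, and K each do?

- Elasticsearch: stores the collected log data;

- Logstash: collects logs. Once a Spring Boot application is integrated with Logstash, it sends its logs to Logstash, which then forwards them to Elasticsearch;

- Kibana: provides a web-based visual interface for viewing the logs.

Setting up the ELK environment with Docker Compose

Docker images to download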

```bash
docker pull elasticsearch:6.4.0
StevendeMacBook-Pro:~ Steven$ docker pull elasticsearch:6.4.0
6.4.0: Pulling from library/elasticsearch
256b176beaff: Pull complete
6cb472d8b34a: Pull complete
3a4f4d86c1c1: Pull complete
d185a64a7692: Pull complete
bc4d63245d6d: Pull complete
c8e97292a30a: Pull complete
c1c1a056e791: Pull complete
69b6c77c3236: Pull complete
Digest: sha256:96345f6d73c0d615ddf8b9683848d625f211efacfe3b5b8765debbd1eebc5663
Status: Downloaded newer image for elasticsearch:6.4.0
docker.io/library/elasticsearch:6.4.0
StevendeMacBook-Pro:~ Steven$
```

```bash
docker pull logstash:6.4.0
StevendeMacBook-Pro:~ Steven$ docker pull logstash:6.4.0
6.4.0: Pulling from library/logstash
256b176beaff: Already exists
5e56485c9087: Pull complete
0313d3f864fa: Pull complete
024adf56c5cb: Pull complete
6209f79813e7: Pull complete
bfdc6f5b31f4: Pull complete
d4236779f9f4: Pull complete
53308bf9b138: Pull complete
c73dc1bb658a: Pull complete
6377a5f3ecf8: Pull complete
ceddac4268d5: Pull complete
Digest: sha256:d9ac381040d68f388be95e2b8a51631f06c0533c47e6593dd62950c2e2226e43
Status: Downloaded newer image for logstash:6.4.0
docker.io/library/logstash:6.4.0
StevendeMacBook-Pro:~ Steven$
```

```bash
docker pull kibana:6.4.0
```

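With the preparation above done, move on to the next step.

Configuration

Create a directory to hold the Logstash configuration, then upload the configuration file to it.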

```bash
StevendeMacBook-Pro:data Steven$ mkdir /Users/Steven/data/logstash
```

The logstash-springboot.conf configuration file has the following content:

```
input {
  tcp {
    mode => "server"
    host => "0.0.0.0"
    port => 4560
    codec => json_lines
  }
}
output {
  elasticsearch {
    hosts => "es:9200"
    index => "springboot-logstash-%{+YYYY.MM.dd}"
  }
}
```
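Before wiring up the Spring Boot application, the TCP input can be smoke-tested by hand. The following is a minimal sketch, assuming Logstash is reachable at 127.0.0.1:4560 as configured above. The class name `LogstashTcpProbe` and the event fields `level` and `message` are my own choices for illustration, and the escaping only covers backslashes, quotes, and line breaks. The point it shows is the framing: the `json_lines` codec expects one JSON object per line, terminated by a newline.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

class LogstashTcpProbe {

    /** Builds one newline-terminated JSON event, the framing json_lines expects. */
    static String buildJsonLine(String level, String message) {
        return "{\"level\":\"" + escape(level) + "\",\"message\":\"" + escape(message) + "\"}\n";
    }

    /** Escapes backslashes, quotes and line breaks so the event stays on a single line. */
    static String escape(String s) {
        return s.replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\n", "\\n")
                .replace("\r", "\\r");
    }

    /** Writes one line to host:port; returns false if the connection or write fails. */
    static boolean send(String host, int port, String jsonLine) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), 2000);
            OutputStream out = socket.getOutputStream();
            out.write(jsonLine.getBytes(StandardCharsets.UTF_8));
            out.flush();
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String line = buildJsonLine("INFO", "hello from LogstashTcpProbe");
        boolean ok = send("127.0.0.1", 4560, line);
        System.out.println(ok ? "sent: " + line.trim() : "could not reach 127.0.0.1:4560");
    }
}
```

If the event is accepted, it should end up in the index for the current day, where it can be found from Kibana once the stack is running.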

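Upload logstash-springboot.conf to the /Users/Steven/data/logstash directory.

Starting the ELK services with a docker-compose.yml script

Create the docker-compose.yml configuration file and upload it to a directory (any directory will do; it depends on where you run your Docker commands and how your directories are organized).

The docker-compose.yml configuration file has the following content: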

```yaml
version: '3'
services:
  elasticsearch:
    image: elasticsearch:6.4.0
    container_name: elasticsearch
    environment:
      - "cluster.name=elasticsearch" # set the cluster name to elasticsearch
      - "discovery.type=single-node" # start in single-node mode
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m" # set the JVM heap size
    volumes:
      - /Users/Steven/data/elasticsearch/plugins:/usr/share/elasticsearch/plugins # mount the plugins directory
      - /Users/Steven/data/elasticsearch/data:/usr/share/elasticsearch/data # mount the data directory
    ports:
      - 9200:9200
  kibana:
    image: kibana:6.4.0
    container_name: kibana
    links:
      - elasticsearch:es # the elasticsearch service can be reached under the hostname es
    depends_on:
      - elasticsearch # start kibana after elasticsearch has been started
    environment:
      - "elasticsearch.hosts=http://es:9200" # address used to reach elasticsearch
    ports:
      - 5601:5601
  logstash:
    image: logstash:6.4.0
    container_name: logstash
    volumes:
      - /Users/Steven/data/logstash/logstash-springboot.conf:/usr/share/logstash/pipeline/logstash.conf # mount the logstash configuration file
    depends_on:
      - elasticsearch # start logstash after elasticsearch has been started
    links:
      - elasticsearch:es # the elasticsearch service can be reached under the hostname es
    ports:
      - 4560:4560
```

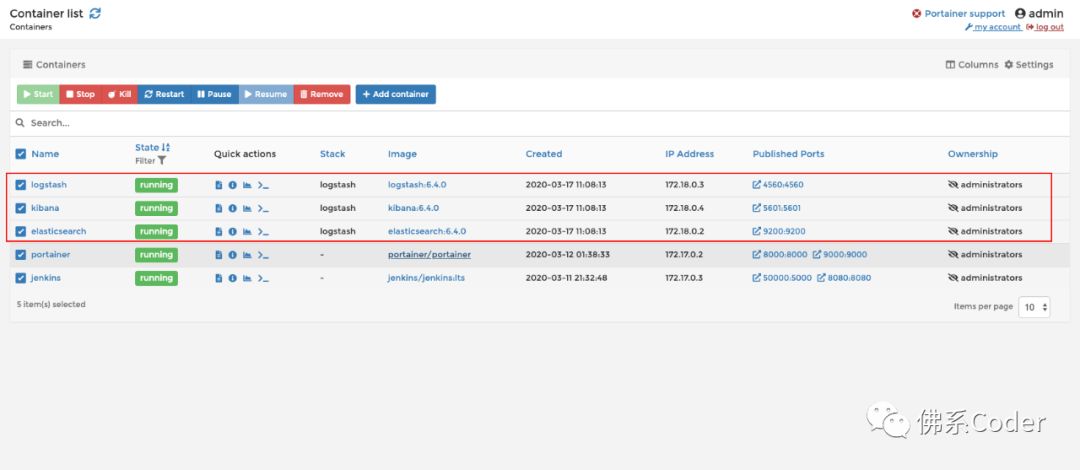

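Start everything with the docker-compose up -d command.

Note: at this point I realized I had not pulled the kibana image yet. That does not matter; Docker Compose pulls it automatically, and the rest of the process is unaffected.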

```bash
StevendeMacBook-Pro:logstash Steven$ docker-compose up -d
Creating network "logstash_default" with the default driver
Pulling kibana (kibana:6.4.0)...
6.4.0: Pulling from library/kibana
256b176beaff: Already exists
88643bded09c: Pull complete
a5ecb9cf4fd5: Pull complete
ac1b01213c5c: Pull complete
3a29336659e0: Pull complete
42b8cd0c698d: Pull complete
13c336562991: Pull complete
5f61543bfc87: Pull complete
90e0671f0205: Pull complete
Digest: sha256:8e27f3f6051acc8542ff87b42a48cebfc0289514143aeb9db025a855712dabb4
Status: Downloaded newer image for kibana:6.4.0
Creating elasticsearch ... done
Creating logstash ... done
Creating kibana ... done
StevendeMacBook-Pro:logstash Steven$
```

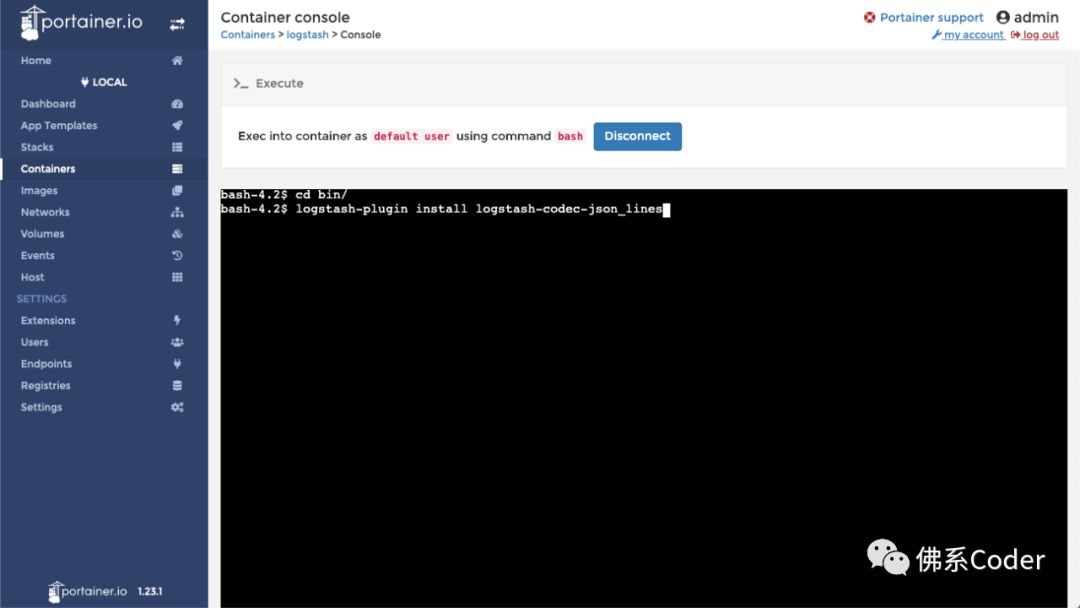
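`docker-compose up -d` returns as soon as the containers have been created, and `depends_on` only controls the order in which containers are started, so Elasticsearch and Kibana may need a little longer before they actually answer requests. The following is a minimal sketch for checking that from the host, assuming the published ports 9200 and 5601 from the compose file. The class name `ElkHttpCheck` and the 2-second timeouts are my own choices.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URI;

class ElkHttpCheck {

    /** Returns the HTTP status code of a GET on the URL, or -1 if no response was obtained. */
    static int httpStatus(String url) {
        try {
            HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
            conn.setConnectTimeout(2000);
            conn.setReadTimeout(2000);
            conn.setRequestMethod("GET");
            int status = conn.getResponseCode();
            conn.disconnect();
            return status;
        } catch (IOException | IllegalArgumentException e) {
            // Connection refused, timeout, or a malformed URL all count as "no response".
            return -1;
        }
    }

    public static void main(String[] args) {
        String[] urls = {"http://localhost:9200/", "http://localhost:5601/"};
        for (String url : urls) {
            int status = httpStatus(url);
            System.out.println(url + " -> " + (status == -1 ? "no response yet" : "HTTP " + status));
        }
    }
}
```

Running it a few times after startup should show both URLs moving from "no response yet" to an HTTP status. A status other than 2xx most likely means the service is still starting.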

Install the json_lines plugin in Logstash

```bash
# Enter the logstash container
docker exec -it logstash /bin/bash
# Change to the bin directory (relative to the container's working directory, as in the session below)
cd bin/
# Install the plugin
logstash-plugin install logstash-codec-json_lines
# Leave the container
exit
# Restart the logstash service
docker restart logstash

StevendeMacBook-Pro:logstash Steven$ docker exec -it logstash /bin/bash
bash-4.2$ cd bin/
bash-4.2$ logstash-plugin install logstash-codec-json_lines
Validating logstash-codec-json_lines
Installing logstash-codec-json_lines
Installation successful
bash-4.2$ exit
exit
StevendeMacBook-Pro:logstash Steven$ docker restart logstash
logstash
StevendeMacBook-Pro:logstash Steven$
```

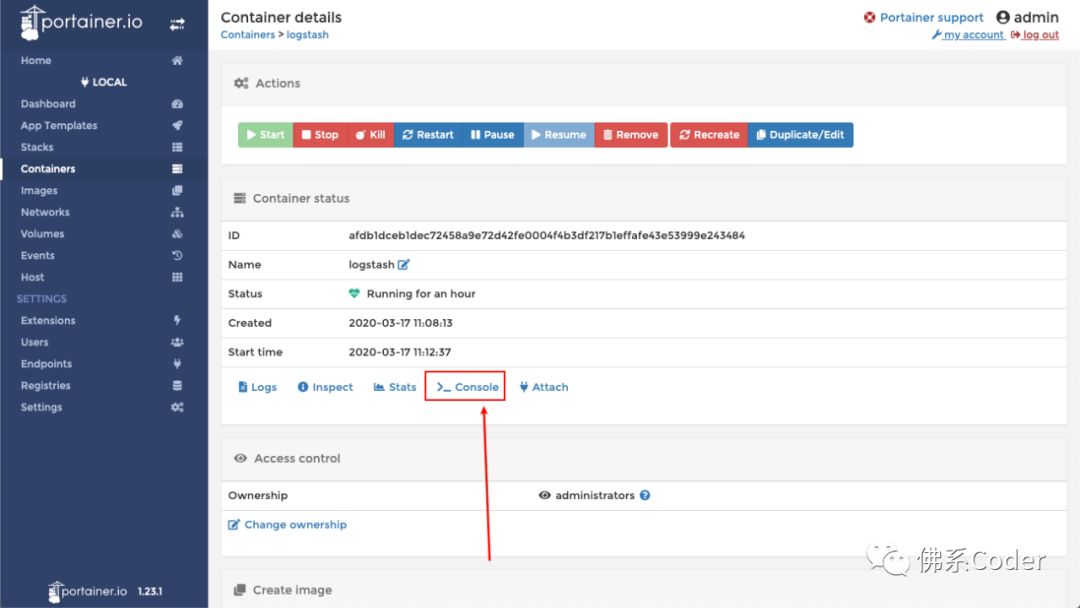

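Alternatively, open a console for the container in Portainer and install the plugin from there.

Visit http://localhost:5601/ to open Kibana.

Integrating Logstash into a Spring Boot application

Add the logstash-logback-encoder dependency to pom.xml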

```xml
<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>5.3</version>
</dependency>
```

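Add a logback-spring.xml configuration file so that Logback sends its log output to Logstash

Note that the destination under the appender node must be changed to the address of your own Logstash service. Mine, for example, is 127.0.0.1:4560.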

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <contextName>logback</contextName>
    <springProperty name="logging.file.path" source="logging.file.path" defaultValue="/Users/Steven/JavaProjectLogs"/>
    <conversionRule conversionWord="clr" converterClass="org.springframework.boot.logging.logback.ColorConverter"/>
    <conversionRule conversionWord="wex"
                    converterClass="org.springframework.boot.logging.logback.WhitespaceThrowableProxyConverter"/>
    <conversionRule conversionWord="wEx"
                    converterClass="org.springframework.boot.logging.logback.ExtendedWhitespaceThrowableProxyConverter"/>
    <property name="CONSOLE_LOG_PATTERN"
              value="${CONSOLE_LOG_PATTERN:-%clr(%d{yyyy-MM-dd HH:mm:ss.SSS}){faint} %clr(${LOG_LEVEL_PATTERN:-%5p}) %clr(${PID:- }){magenta} %clr(---){faint} %clr([%15.15t]){faint} %clr(%-40.40logger{39}){cyan} %clr(:){faint} %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}}"/>
    <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>debug</level>
        </filter>
        <encoder>
            <Pattern>${CONSOLE_LOG_PATTERN}</Pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>
    <appender name="INFO_FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${logging.file.path}/-eureka-server.log</file>
        <encoder>
            <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} [%file : %line] - %msg%n</pattern>
            <charset>UTF-8</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${logging.file.path}/eureka-server-%d{yyyy-MM-dd}.%i.log</fileNamePattern>
            <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                <maxFileSize>50MB</maxFileSize>
            </timeBasedFileNamingAndTriggeringPolicy>
            <maxHistory>7</maxHistory>
        </rollingPolicy>
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>info</level>
        </filter>
    </appender>
    <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>127.0.0.1:4560</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"/>
    </appender>
    <logger name="com.jiniu.extdataintegration.dao.mapper" level="DEBUG">
        <appender-ref ref="INFO_FILE"/>
        <appender-ref ref="CONSOLE"/>
    </logger>
    <root level="info">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="INFO_FILE"/>
        <appender-ref ref="LOGSTASH"/>
    </root>
</configuration>
```

The file above has to be adapted to your own project. The two parts that matter most are these:

```xml
<appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
    <destination>127.0.0.1:4560</destination>
    <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"/>
</appender>

<root level="INFO">
    <appender-ref ref="CONSOLE"/>
    <appender-ref ref="INFO_FILE"/>
    <appender-ref ref="LOGSTASH"/>
</root>
```

Everything else can follow the configuration your own project already uses.
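To make the Kibana steps easier to follow, here is a rough picture of what one event from `LogstashEncoder` looks like by the time it reaches Logstash. The field names below are the encoder's standard ones as far as I know. The class name `EncodedEventShape`, the logger name `com.example.Demo`, and all values are made up for illustration, and the real timestamp format and any extra fields depend on the encoder version and configuration.

```java
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

class EncodedEventShape {

    /** Assembles the standard fields in a fixed order; the values are illustrative only. */
    static Map<String, Object> sampleEvent(Instant when, String logger, String message) {
        Map<String, Object> event = new LinkedHashMap<>();
        event.put("@timestamp", when.toString());
        event.put("@version", "1");
        event.put("message", message);
        event.put("logger_name", logger);
        event.put("thread_name", "main");
        event.put("level", "INFO");
        event.put("level_value", 20000);
        return event;
    }

    /** Minimal JSON rendering for a flat map of strings and numbers (no escaping of special characters). */
    static String toJson(Map<String, Object> event) {
        StringJoiner json = new StringJoiner(",", "{", "}");
        for (Map.Entry<String, Object> entry : event.entrySet()) {
            Object value = entry.getValue();
            String rendered = (value instanceof Number) ? value.toString() : "\"" + value + "\"";
            json.add("\"" + entry.getKey() + "\":" + rendered);
        }
        return json.toString();
    }

    public static void main(String[] args) {
        System.out.println(toJson(sampleEvent(Instant.now(), "com.example.Demo", "application started")));
    }
}
```

The `@timestamp` field is the one chosen as the time filter when the index pattern is created in Kibana, and fields such as `level` and `logger_name` become searchable in Discover.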

Start the Spring Boot application. Its startup log and everything it logs afterwards will now be collected.

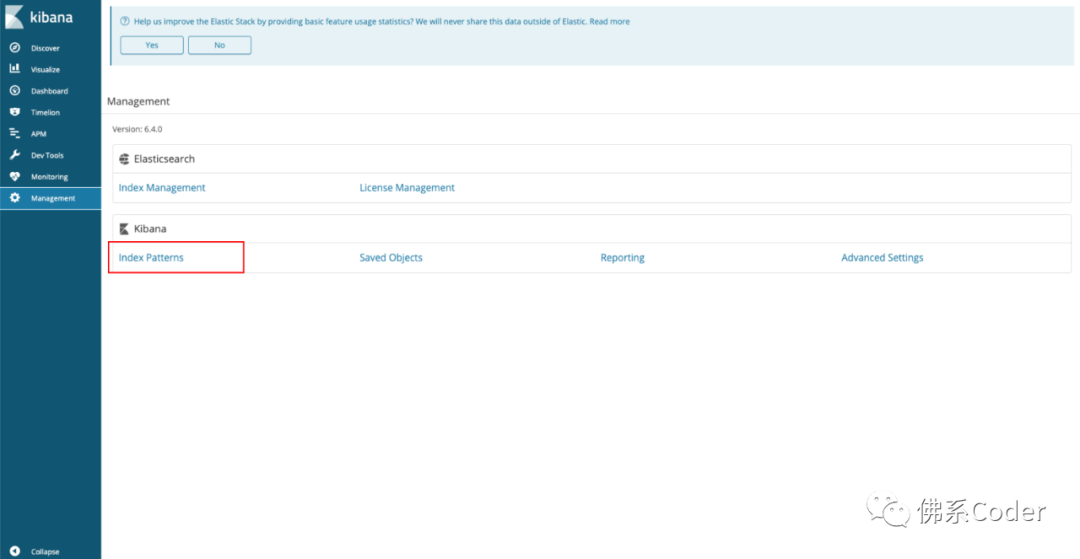

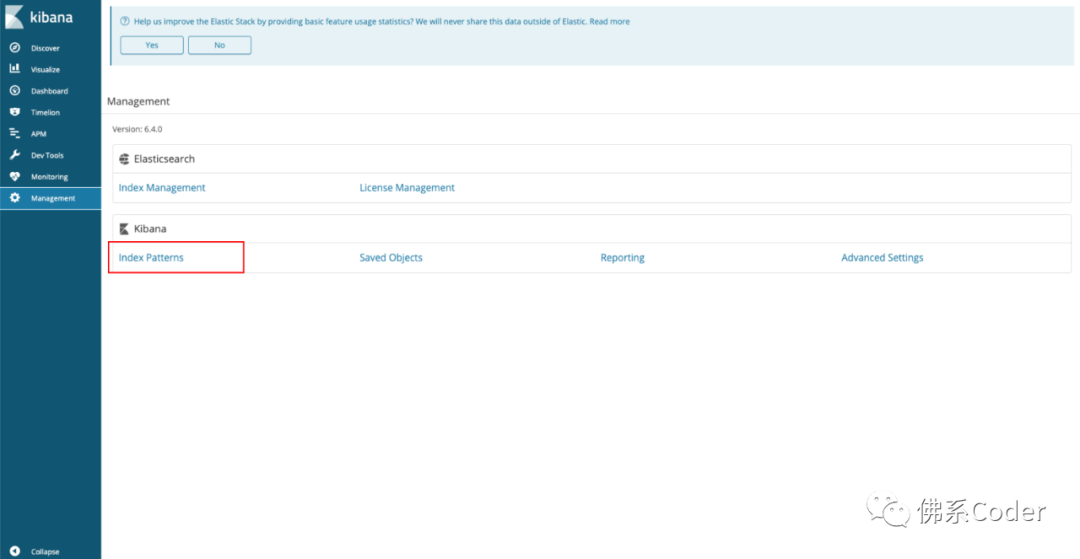

Open Kibana, choose Management from the menu, and create an index pattern

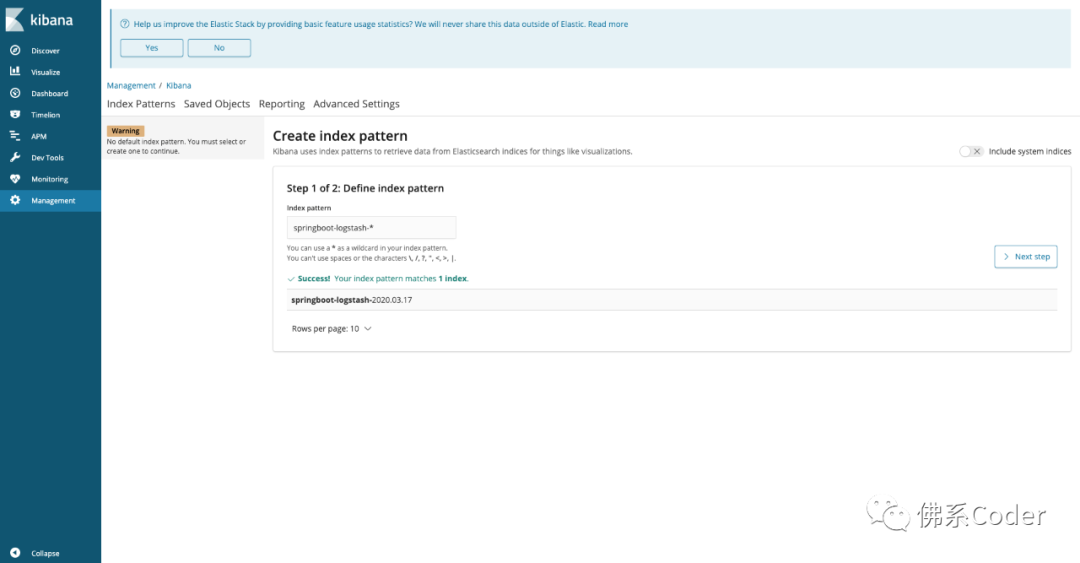

Create the following index pattern

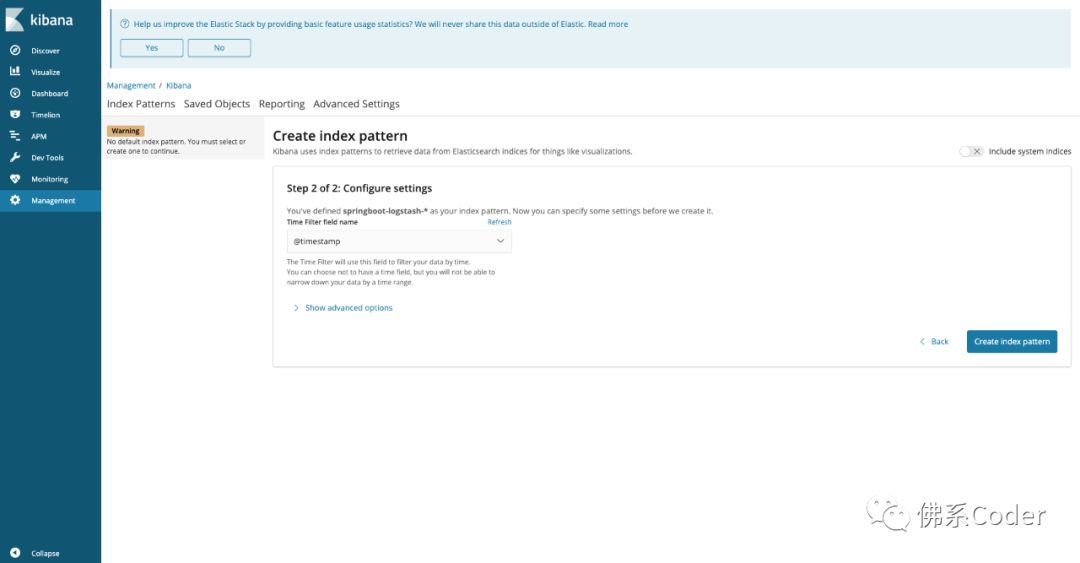

In the settings step, select @timestamp

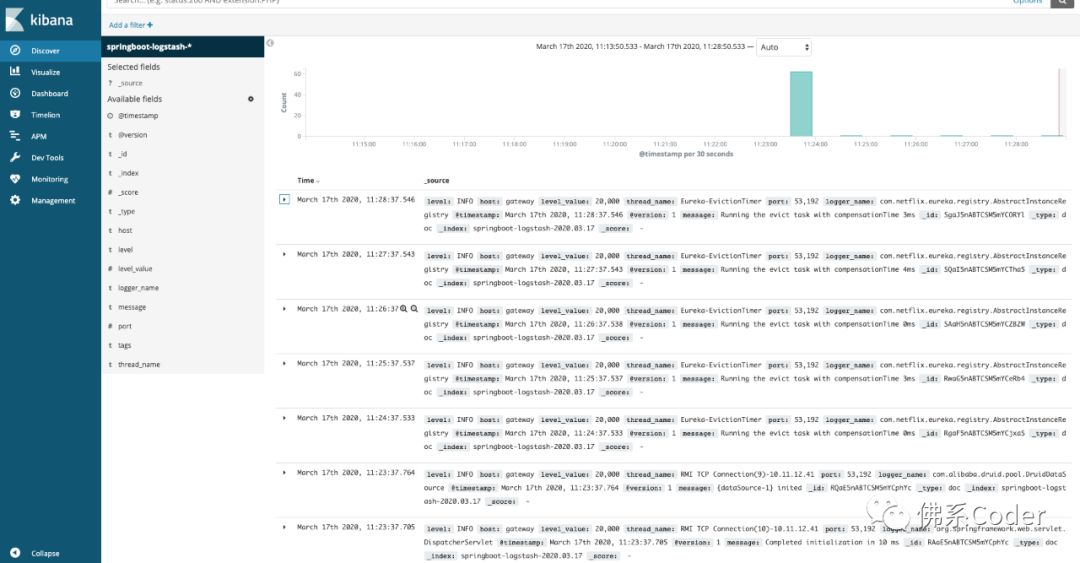

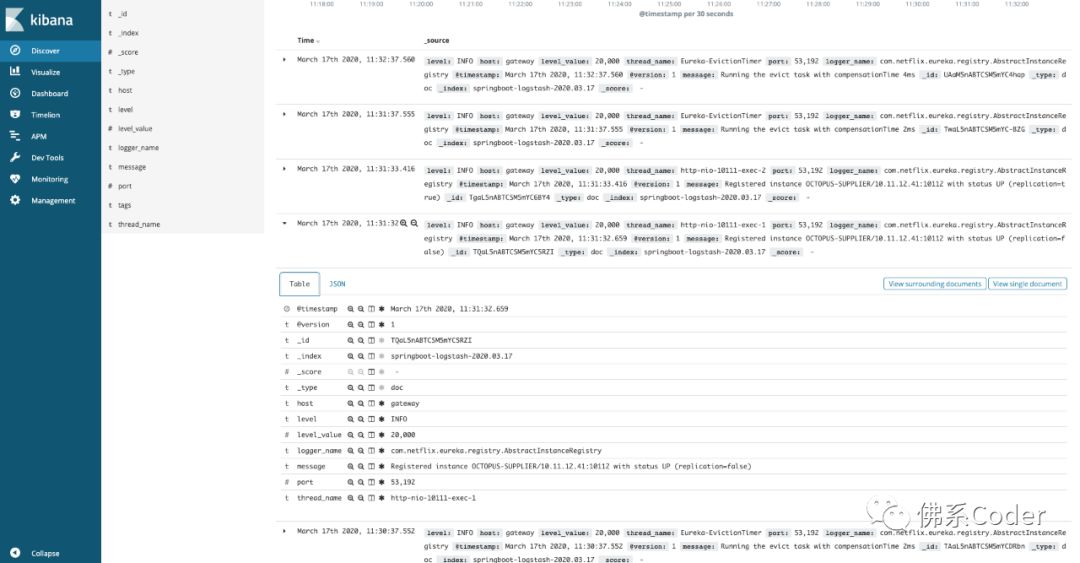

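Click Create index pattern at the bottom right, then choose Discover from the menu. The project's logs have indeed arrived.

Because the application I started is a Eureka service registry, I then tried registering another service with it. Querying again showed that the service registration logs had come in as well.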

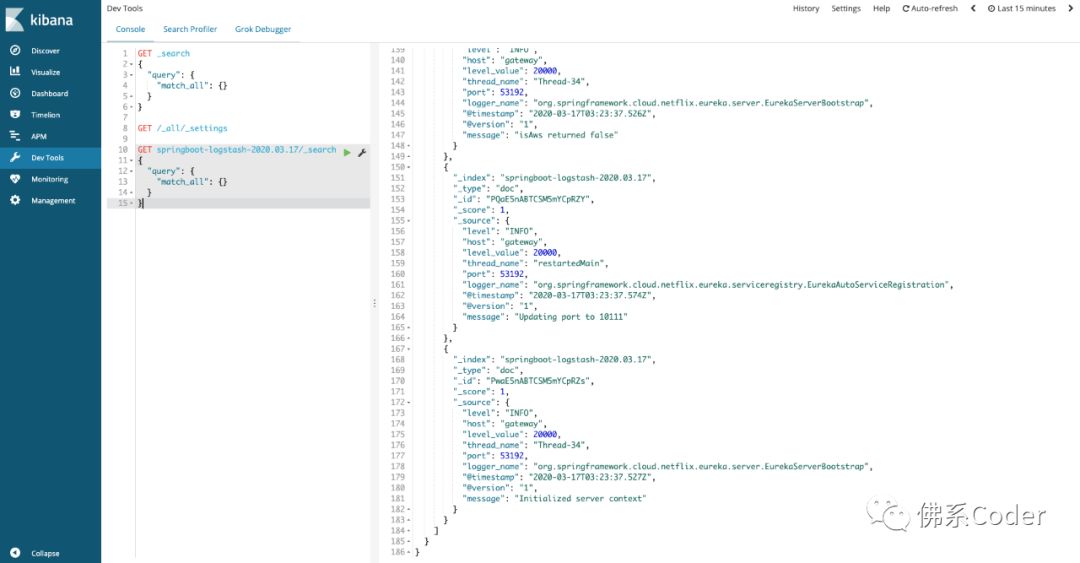

In addition, if you are familiar with Elasticsearch commands, you can write your own queries directly in Dev Tools, for example:

```
GET springboot-logstash-2020.03.17/_search
{
  "query": {
    "match_all": {}
  }
}
```
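The index name in the query above follows the `springboot-logstash-%{+YYYY.MM.dd}` pattern from the Logstash output, so there is one index per day. The following is a small sketch for working out the name and the matching `_search` URL for a given day. The class name `DailyIndexName` and the base URL `http://localhost:9200` (the port published in the compose file) are assumptions. As far as I know, Logstash takes the date from the event's `@timestamp`, which it keeps in UTC, so the example uses the current UTC date rather than the local one.

```java
import java.time.LocalDate;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

class DailyIndexName {

    // java.time equivalent of the YYYY.MM.dd part of the Logstash index pattern.
    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyy.MM.dd");

    /** Returns the index that holds the events of the given day. */
    static String indexFor(LocalDate day) {
        return "springboot-logstash-" + DAY.format(day);
    }

    /** Returns the _search URL for that day's index under the given Elasticsearch base URL. */
    static String searchUrl(String esBaseUrl, LocalDate day) {
        return esBaseUrl + "/" + indexFor(day) + "/_search";
    }

    public static void main(String[] args) {
        LocalDate todayUtc = LocalDate.now(ZoneOffset.UTC);
        System.out.println(searchUrl("http://localhost:9200", todayUtc));
    }
}
```

The printed URL can be opened in a browser or used with any HTTP client; without a request body, `_search` returns the first page of matching documents.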
